HeadGesture: Hands-Free Input Approach Leveraging Head Movements for HMD Devices

Yukang Yan, Chun Yu, Xin Yi, Yuanchun Shi

Published at ACM IMWUT, 2018

Abstract

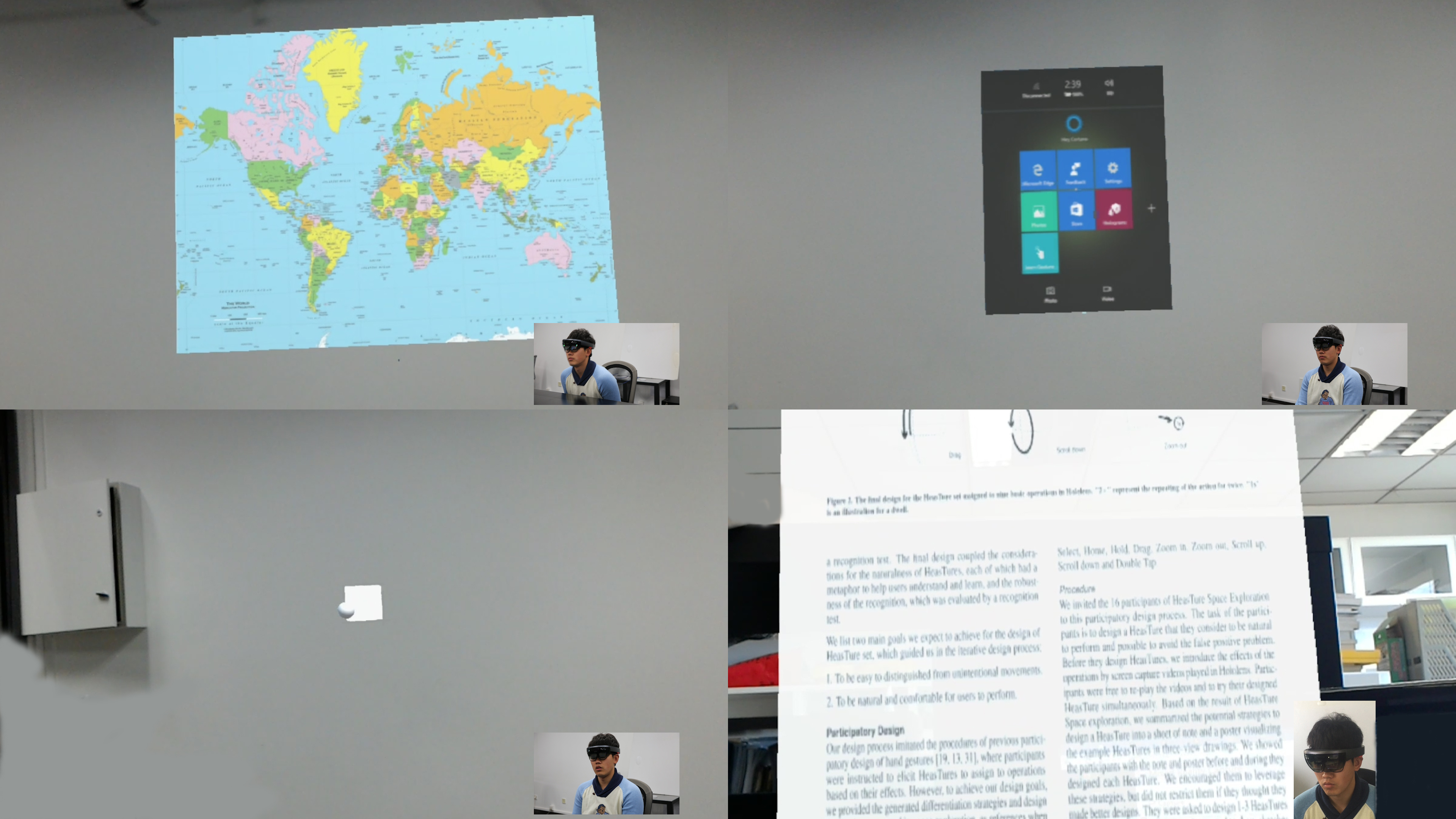

We propose HeadGesture, a hands-free input approach for interacting with Head Mounted Display (HMD) devices. With HeadGesture, users do not need to raise their arms to perform gestures or operate handheld controllers in mid-air. Instead, they interact with the device through simple head-movement gestures. This leaves users' hands free for other tasks, e.g., taking notes or manipulating tools. The approach also reduces occlusion of the field of view by the hands [11] and alleviates arm fatigue [7]. However, a main challenge for HeadGesture is distinguishing the defined gestures from unintentional head movements. To design intuitive gestures and recognize them reliably, we follow a four-stage process: Exploration, Design, Implementation, and Evaluation. We first design the gesture set through user experiments on gesture-space exploration and gesture elicitation. Then, we implement algorithms to recognize the gestures, including gesture segmentation, data reformation and unification, feature extraction, and machine-learning-based classification. Finally, we evaluate user performance with HeadGesture in a target selection experiment and in application tests. The results show that HeadGesture's completion times are comparable to those of mid-air hand gestures. Additionally, users feel significantly less fatigue than with hand gestures and can learn and remember the gestures easily. Based on these findings, we expect HeadGesture to be an efficient supplementary input approach for HMD devices.
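The abstract names the recognition pipeline (segmentation, data reformation and unification, feature extraction, classification) without giving its details. The sketch below illustrates the general shape of such a pipeline under assumptions that are not from the paper: head pose arrives as hypothetical (yaw, pitch) angles in degrees at a fixed 100 Hz rate (`dt = 0.01`), segments are cut with a hypothetical angular-speed threshold, unification is approximated by resampling to a fixed length and normalizing amplitude, and a simple nearest-centroid classifier stands in for the paper's machine-learning model. All function names and thresholds are illustrative, not the authors' implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical input format: one (yaw, pitch) head-pose sample in degrees.
Sample = Tuple[float, float]


def segment(samples: Sequence[Sample], speed_threshold: float = 20.0,
            dt: float = 0.01, min_len: int = 5) -> List[List[Sample]]:
    """Cut candidate gesture segments wherever angular speed (deg/s) stays at
    or above speed_threshold; runs shorter than min_len samples are treated as
    unintentional jitter and dropped. Threshold values are assumptions."""
    segments: List[List[Sample]] = []
    current: List[Sample] = []
    for prev, cur in zip(samples, samples[1:]):
        speed = math.hypot(cur[0] - prev[0], cur[1] - prev[1]) / dt
        if speed >= speed_threshold:
            if not current:
                current.append(prev)  # include the sample where motion starts
            current.append(cur)
        else:
            if len(current) >= min_len:
                segments.append(current)
            current = []
    if len(current) >= min_len:  # stream ended mid-gesture
        segments.append(current)
    return segments


def resample(seg: Sequence[Sample], n: int = 16) -> List[Sample]:
    """Linearly interpolate seg to exactly n points, translated so the first
    point sits at the origin (a simple form of length/offset unification)."""
    x0, y0 = seg[0]
    out: List[Sample] = []
    for i in range(n):
        t = i * (len(seg) - 1) / (n - 1)  # fractional index into seg
        lo = int(t)
        hi = min(lo + 1, len(seg) - 1)
        frac = t - lo
        x = seg[lo][0] + frac * (seg[hi][0] - seg[lo][0])
        y = seg[lo][1] + frac * (seg[hi][1] - seg[lo][1])
        out.append((x - x0, y - y0))
    return out


def features(seg: Sequence[Sample], n: int = 16) -> List[float]:
    """Flatten the resampled trajectory after scaling its largest excursion
    to 1, so small and large versions of a gesture look alike."""
    pts = resample(seg, n)
    scale = max(max(abs(x), abs(y)) for x, y in pts) or 1.0
    return [c / scale for p in pts for c in p]


class NearestCentroid:
    """Stand-in classifier (not the paper's model): predicts the label whose
    mean training feature vector is closest in Euclidean distance."""

    def fit(self, X: Sequence[List[float]], y: Sequence[str]) -> "NearestCentroid":
        groups: Dict[str, List[List[float]]] = {}
        for vec, label in zip(X, y):
            groups.setdefault(label, []).append(vec)
        self.centroids = {
            label: [sum(col) / len(vecs) for col in zip(*vecs)]
            for label, vecs in groups.items()
        }
        return self

    def predict(self, vec: List[float]) -> str:
        return min(self.centroids,
                   key=lambda lbl: math.dist(vec, self.centroids[lbl]))
```

For example, a run of yaw samples rising by 0.5° per sample (50°/s at the assumed rate) between two still periods forms a single segment, while a stream of still samples yields none. Normalizing amplitude lets a small head turn and a large one map to the same template; whether that is desirable depends on whether a gesture set distinguishes gestures by magnitude.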

Materials

BibTeX

@article{yukang20181,
  author = {Yan, Yukang and Yu, Chun and Yi, Xin and Shi, Yuanchun},
  title = {HeadGesture: Hands-Free Input Approach Leveraging Head Movements for HMD Devices},
  year = {2018},
  issue_date = {December 2018},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {2},
  number = {4},
  url = {https://doi.org/10.1145/3287076},
  doi = {10.1145/3287076},
  abstract = {},
  journal = {Proc. ACM Interact. Mob. Wearable Ubiquitous Technol.},
  month = {dec},
  articleno = {198},
  numpages = {23},
  keywords = {Gesture, Virtual Reality, Head Movement Interaction}
}