PrivateTalk: Activating Voice Input with Hand-On-Mouth Gesture Detected by Bluetooth Earphones

Yukang Yan, Chun Yu, Yingtian Shi, Minxing Xie.

Published at UIST 2019

Abstract

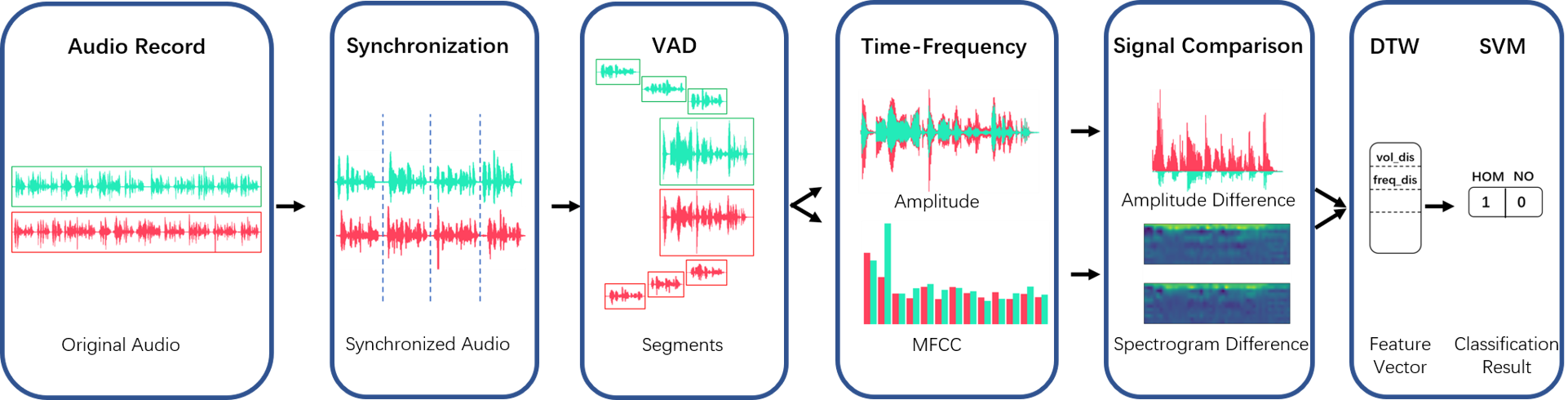

We introduce PrivateTalk, an on-body interaction technique that allows users to activate voice input by performing the Hand-On-Mouth gesture while speaking. The gesture consists of one hand partially covering the mouth from one side. PrivateTalk provides two benefits simultaneously. First, it enhances privacy by reducing the spread of the voice while also concealing lip movements from other people in the environment. Second, the simple gesture removes the need to speak wake-up words and is more accessible than a physical or software button, especially when the device is not in the user's hands. To recognize the Hand-On-Mouth gesture, we propose a novel sensing technique that leverages the difference between the signals received by two Bluetooth earphones worn on the left and right ears. Our evaluation shows that the gesture can be accurately detected, and that users consistently like PrivateTalk and consider it intuitive and effective.
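
The abstract does not spell out how the left and right earphone signals are compared. As a rough illustration of the general idea only, and not the authors' recognition method, the sketch below computes an inter-channel level difference for one audio frame and flags frames where the two sides differ noticeably. The function names, the RMS feature, and the 3 dB threshold are all assumptions made for this example.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of audio samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_difference_db(left, right, eps=1e-12):
    """Left-minus-right level difference, in decibels, for one frame.

    eps avoids division by zero and log of zero on silent frames.
    """
    return 20.0 * math.log10((rms(left) + eps) / (rms(right) + eps))


def is_asymmetric_frame(left, right, threshold_db=3.0):
    """Hypothetical rule: flag a frame when the two channels differ by
    more than threshold_db. The threshold is a placeholder, not a value
    taken from the paper."""
    return abs(level_difference_db(left, right)) > threshold_db
```

A real detector would likely need calibration per user and device, plus smoothing over several frames, before a single threshold like this could be trusted.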

Bibtex

@inproceedings{yukang2019,
  author = {Yan, Yukang and Yu, Chun and Shi, Yingtian and Xie, Minxing},
  title = {PrivateTalk: Activating Voice Input with Hand-On-Mouth Gesture Detected by Bluetooth Earphones},
  year = {2019},
  isbn = {9781450368162},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3332165.3347950},
  doi = {10.1145/3332165.3347950},
  abstract = {},
  booktitle = {Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology},
  pages = {1013--1020},
  numpages = {8},
  keywords = {voice input, hand gesture},
  location = {New Orleans, LA, USA},
  series = {UIST '19}
}