EarBuddy: Enabling On-Face Interaction via Wireless Earbuds

Xuhai Xu, Haitian Shi, Xin Yi, Wenjia Liu, Yukang Yan, Yuanchun Shi, Alex Mariakakis, Jennifer Mankoff, Anind K. Dey

Published at ACM CHI 2020

Abstract

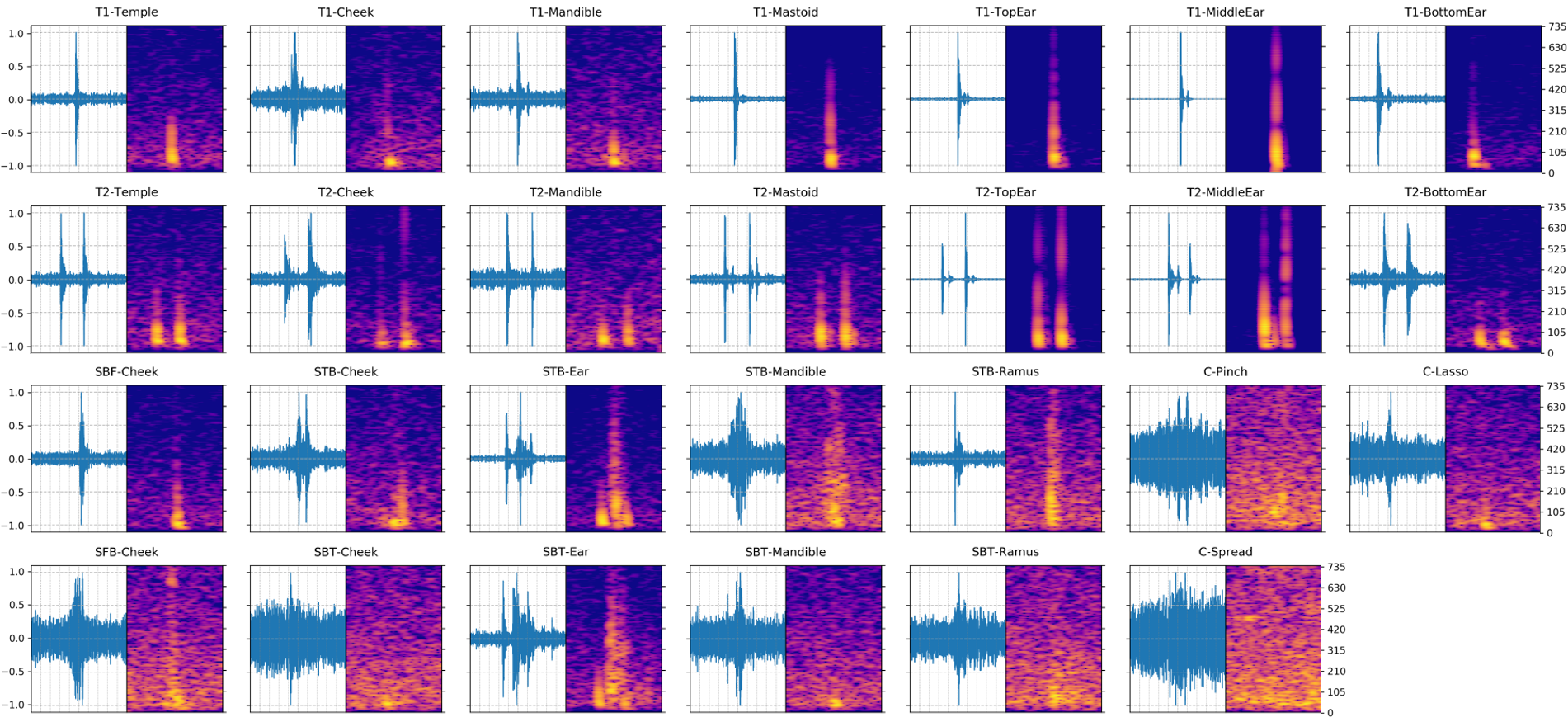

Past research on on-body interaction typically requires custom sensors, limiting its scalability and generalizability. We propose EarBuddy, a real-time system that leverages the microphone in commercial wireless earbuds to detect tapping and sliding gestures near the face and ears. We developed a design space that generated 27 valid gestures and conducted a user study (N=16) to select the eight gestures that were optimal for both human preference and microphone detectability. We collected a dataset on those eight gestures (N=20) and trained deep learning models for gesture detection and classification. Our optimized classifier achieved an accuracy of 95.3%. Finally, we conducted a user study (N=12) to evaluate EarBuddy's usability. Our results show that EarBuddy can facilitate novel interaction and that users feel very positively about the system. EarBuddy provides a new eyes-free, socially acceptable input method that is compatible with commercial wireless earbuds and has the potential for scalability and generalizability.
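The abstract describes a two-stage pipeline: gestures are first detected in the earbud microphone stream and then classified. EarBuddy uses trained deep learning models for both stages. The sketch below swaps in a much simpler technique, a short-time RMS energy gate, for the detection stage only, to illustrate how candidate tap or slide segments might be cut out of a continuous signal before being handed to a classifier. The function names, frame length, hop size, threshold, and gap parameter are all hypothetical choices, not values or methods from the paper.

```python
import math
from typing import List, Sequence, Tuple


def frame_energies(signal: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """Root-mean-square energy of each full frame; a trailing partial frame is dropped."""
    energies = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = signal[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def detect_events(
    signal: Sequence[float],
    frame_len: int = 256,     # hypothetical frame size in samples
    hop: int = 128,           # hypothetical hop size in samples
    threshold: float = 0.1,   # hypothetical RMS gate
    min_gap_frames: int = 4,  # hypothetical: shorter quiet gaps are merged
) -> List[Tuple[int, int]]:
    """Return (start_sample, end_sample) spans whose frame RMS reaches `threshold`.

    Hypothetical stand-in for a learned detector, not EarBuddy's model.
    Runs of active frames separated by fewer than `min_gap_frames` quiet
    frames are merged into a single event.
    """
    energies = frame_energies(signal, frame_len, hop)
    events = []
    start = None
    last_active = None
    for i, e in enumerate(energies):
        if e >= threshold:
            if start is None:
                start = i
            elif i - last_active - 1 >= min_gap_frames:
                # Quiet gap was long enough: close the previous event.
                events.append((start, last_active))
                start = i
            last_active = i
    if start is not None:
        events.append((start, last_active))
    # Convert frame indices to sample indices (end covers the last frame fully).
    return [(s * hop, e * hop + frame_len) for s, e in events]


if __name__ == "__main__":
    # Synthetic 4000-sample signal: silence with two short "tap-like" bursts.
    sig = [0.0] * 4000
    for i in range(1000, 1500):
        sig[i] = 0.5 if i % 2 == 0 else -0.5
    for i in range(3000, 3300):
        sig[i] = 0.5 if i % 2 == 0 else -0.5
    print(detect_events(sig))
```

In a complete system, each returned span would be cropped from the audio and passed to a gesture classifier; a fixed energy gate like this one is easily fooled by speech or ambient noise, which is one motivation for using a learned detector instead.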

Materials

BibTeX

@inproceedings{xuhai2020,
  author = {Xu, Xuhai and Shi, Haitian and Yi, Xin and Liu, WenJia and Yan, Yukang and Shi, Yuanchun and Mariakakis, Alex and Mankoff, Jennifer and Dey, Anind K.},
  title = {EarBuddy: Enabling On-Face Interaction via Wireless Earbuds},
  year = {2020},
  isbn = {9781450367080},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3313831.3376836},
  doi = {10.1145/3313831.3376836},
  abstract = {},
  booktitle = {Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},
  pages = {1–14},
  numpages = {14},
  keywords = {face and ear interaction, wireless earbuds, gesture recognition},
  location = {Honolulu, HI, USA},
  series = {CHI '20}
}