FaceSight: Enabling Hand-to-Face Gesture Interaction on AR Glasses with a Downward-Facing Camera Vision

Yueting Weng, Chun Yu, Yingtian Shi, Yuhang Zhao, Yukang Yan, Yuanchun Shi.

Published at ACM CHI 2021

Abstract

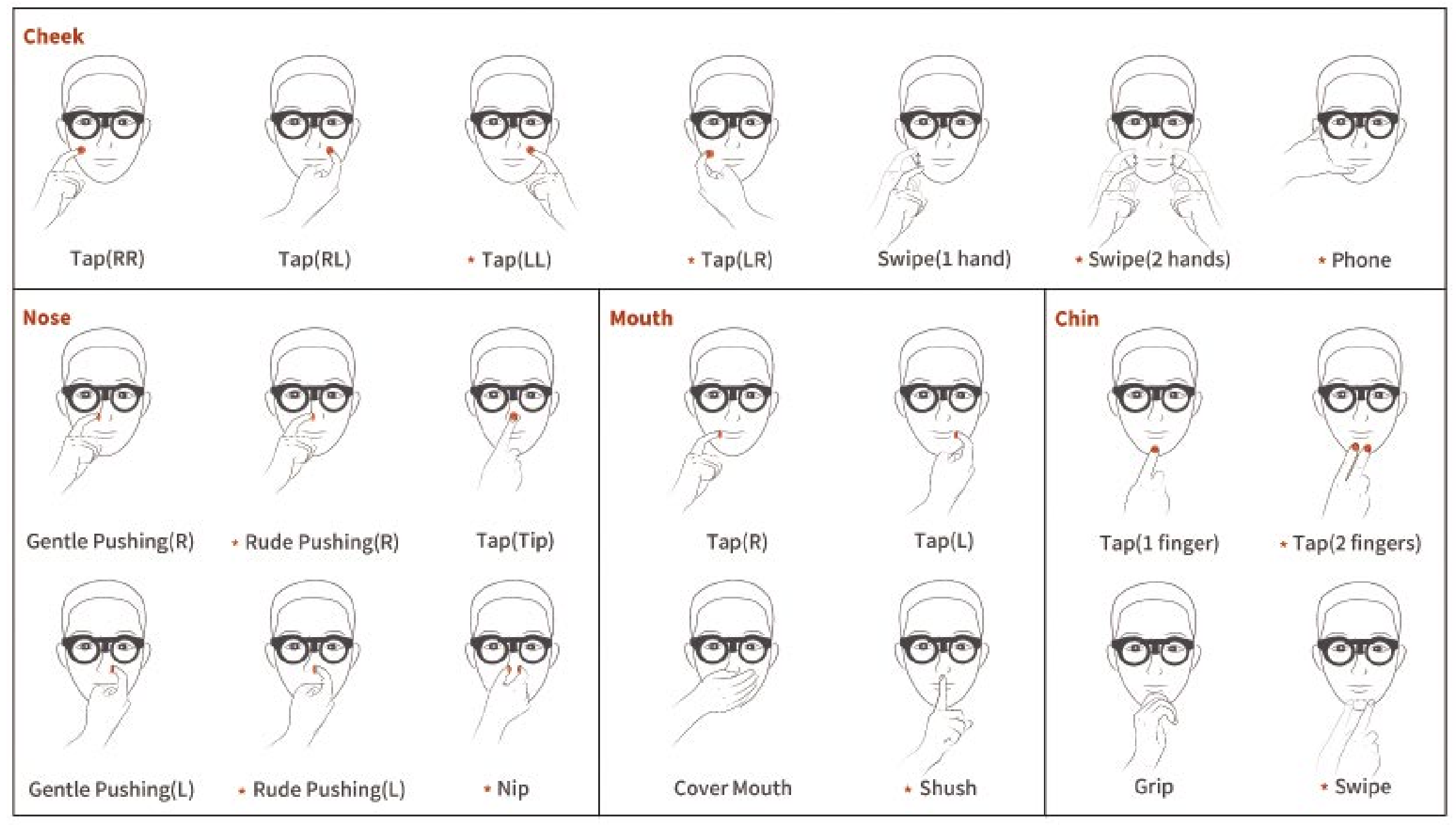

We present FaceSight, a computer vision-based hand-to-face gesture sensing technique for AR glasses. FaceSight mounts an infrared camera on the bridge of the AR glasses to sense the lower face and hand behaviors. We obtained 21 hand-to-face gestures and demonstrated their potential interaction benefits through five AR applications. We designed and implemented an algorithm pipeline that segments facial regions, detects hand-face contact (F1 score: 98.36%), and trains convolutional neural network (CNN) models to classify the hand-to-face gestures. The input features include gesture recognition, nose deformation estimation, and continuous fingertip movement. Our algorithm achieves a classification accuracy of 83.06% across all gestures, validated on data from 10 users. Given its compact form factor and rich gesture set, we see FaceSight as a practical solution for augmenting the input capability of AR glasses in the future.

Bibtex

@inproceedings{yueting2021,
  author = {Weng, Yueting and Yu, Chun and Shi, Yingtian and Zhao, Yuhang and Yan, Yukang and Shi, Yuanchun},
  title = {FaceSight: Enabling Hand-to-Face Gesture Interaction on AR Glasses with a Downward-Facing Camera Vision},
  year = {2021},
  isbn = {9781450380966},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3411764.3445484},
  doi = {10.1145/3411764.3445484},
  abstract = {},
  booktitle = {Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems},
  articleno = {10},
  numpages = {14},
  keywords = {AR Glasses, Hand-to-Face Gestures, Computer Vision},
  location = {Yokohama, Japan},
  series = {CHI '21}
}