ClenchClick: Hands-Free Target Selection Method Leveraging Teeth-Clench for Augmented Reality

Xiyuan Shen,

Yukang Yan,

Chun Yu,

Yuanchun Shi.

Published at

ACM IMWUT

2022

Abstract

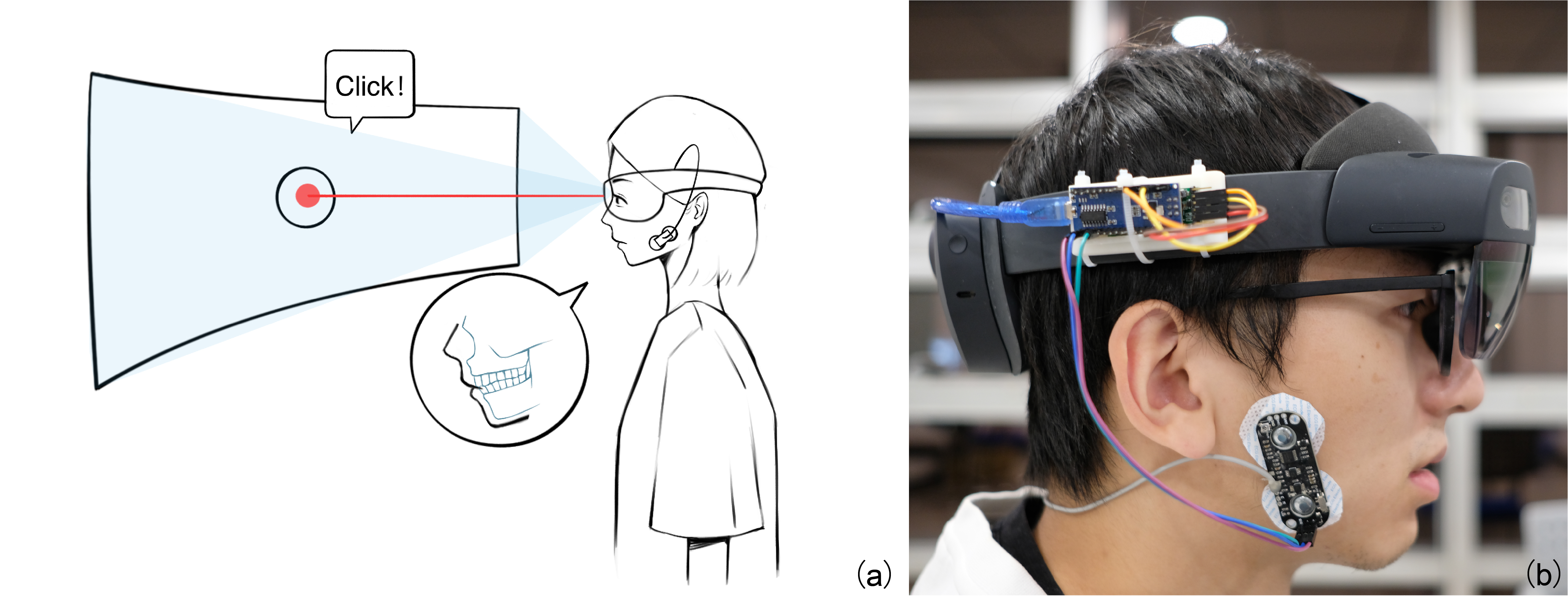

We propose to explore teeth-clenching-based target selection in Augmented Reality (AR), as the subtlety of the interaction can benefit applications that occupy the user's hands or that are sensitive to social norms. To support the investigation, we implemented an EMG-based teeth-clenching detection system (ClenchClick), in which we adopted customized thresholds for different users. We first explored and compared potential interaction designs that combine head movements and teeth clenching. We settled on a Point-and-Click design, with clenches serving as the confirmation mechanism. We then evaluated the task load and performance of ClenchClick by comparing it with two baseline methods (hand gestures and dwell) in target selection tasks. Results showed that ClenchClick outperformed hand gestures in workload, physical load, accuracy, and speed, and outperformed dwell in workload and temporal load. Lastly, through user studies, we demonstrated the advantages of ClenchClick in real-world tasks, including efficient and accurate hands-free target selection, natural and unobtrusive interaction in public, and robust head gesture input.
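The abstract says only that clenches are detected from EMG with a threshold customized per user, and that a clench confirms the target the head is pointing at. The sketch below shows one plausible way such a pipeline could work. It is not the authors' implementation. The non-overlapping RMS windows, the calibration rule (halfway between the user's resting and clenching levels, floored at rest mean + 3 standard deviations), and all function names are hypothetical assumptions made for illustration.

```python
import math
from statistics import mean, pstdev


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def windowed_rms(signal, size):
    """RMS of consecutive, non-overlapping windows of `size` samples.

    Trailing samples that do not fill a whole window are ignored.
    """
    return [rms(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def calibrate_threshold(rest_rms, clench_rms, alpha=0.5):
    """Hypothetical per-user threshold from a short calibration session.

    Places the threshold a fraction `alpha` of the way from the user's
    resting RMS level to their clenching RMS level, but never below
    rest mean + 3 standard deviations (to reject resting noise).
    """
    rest_level = mean(rest_rms)
    clench_level = mean(clench_rms)
    noise_floor = rest_level + 3 * pstdev(rest_rms)
    return max(noise_floor, rest_level + alpha * (clench_level - rest_level))


def detect_clench_onsets(rms_values, threshold):
    """Indices where the RMS envelope crosses the threshold upward.

    A sustained clench yields a single onset, i.e. one "click".
    """
    onsets = []
    above = False
    for i, value in enumerate(rms_values):
        if value >= threshold and not above:
            onsets.append(i)
        above = value >= threshold
    return onsets


def select_targets(pointed_targets, onsets):
    """Point-and-Click: the head ray decides what is pointed at in each
    window (None if nothing); a clench onset confirms that target."""
    return [pointed_targets[i] for i in onsets if pointed_targets[i] is not None]


# Usage with made-up calibration and session data (not from the paper).
threshold = calibrate_threshold([0.10, 0.12, 0.09, 0.11], [0.90, 1.00, 0.95])
envelope = [0.1, 0.2, 0.8, 0.9, 0.3, 0.7, 0.1]
pointed = ["A", "A", "B", "B", "C", None, "C"]
onsets = detect_clench_onsets(envelope, threshold)
selected = select_targets(pointed, onsets)
print(round(threshold, 4), onsets, selected)
```

With these toy numbers the threshold lands at 0.5275, clenches begin at windows 2 and 5, and only target "B" is selected, because the head was not pointing at anything during the second clench. Firing on the upward crossing, rather than on every window above the threshold, keeps a held clench from producing repeated selections.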

Materials

BibTeX

@article{10.1145/3550327,
  author = {Shen, Xiyuan and Yan, Yukang and Yu, Chun and Shi, Yuanchun},
  title = {ClenchClick: Hands-Free Target Selection Method Leveraging Teeth-Clench for Augmented Reality},
  year = {2022},
  issue_date = {September 2022},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {6},
  number = {3},
  url = {https://doi.org/10.1145/3550327},
  doi = {10.1145/3550327},
  journal = {Proc. ACM Interact. Mob. Wearable Ubiquitous Technol.},
  month = {sep},
  articleno = {139},
  numpages = {26},
  keywords = {augmented reality, EMG sensing, target selection, hands-free interaction}
}