ConeSpeech: Exploring Directional Speech Interaction for Multi-Person Remote Communication in Virtual Reality

Yukang Yan, Haohua Liu, Yingtian Shi, Jingying Wang, Ruici Guo, Zisu Li, Xuhai Xu, Chun Yu, Yuntao Wang, Yuanchun Shi.

Published at IEEE VR 2023 (Best Paper Nominee Award)

Abstract

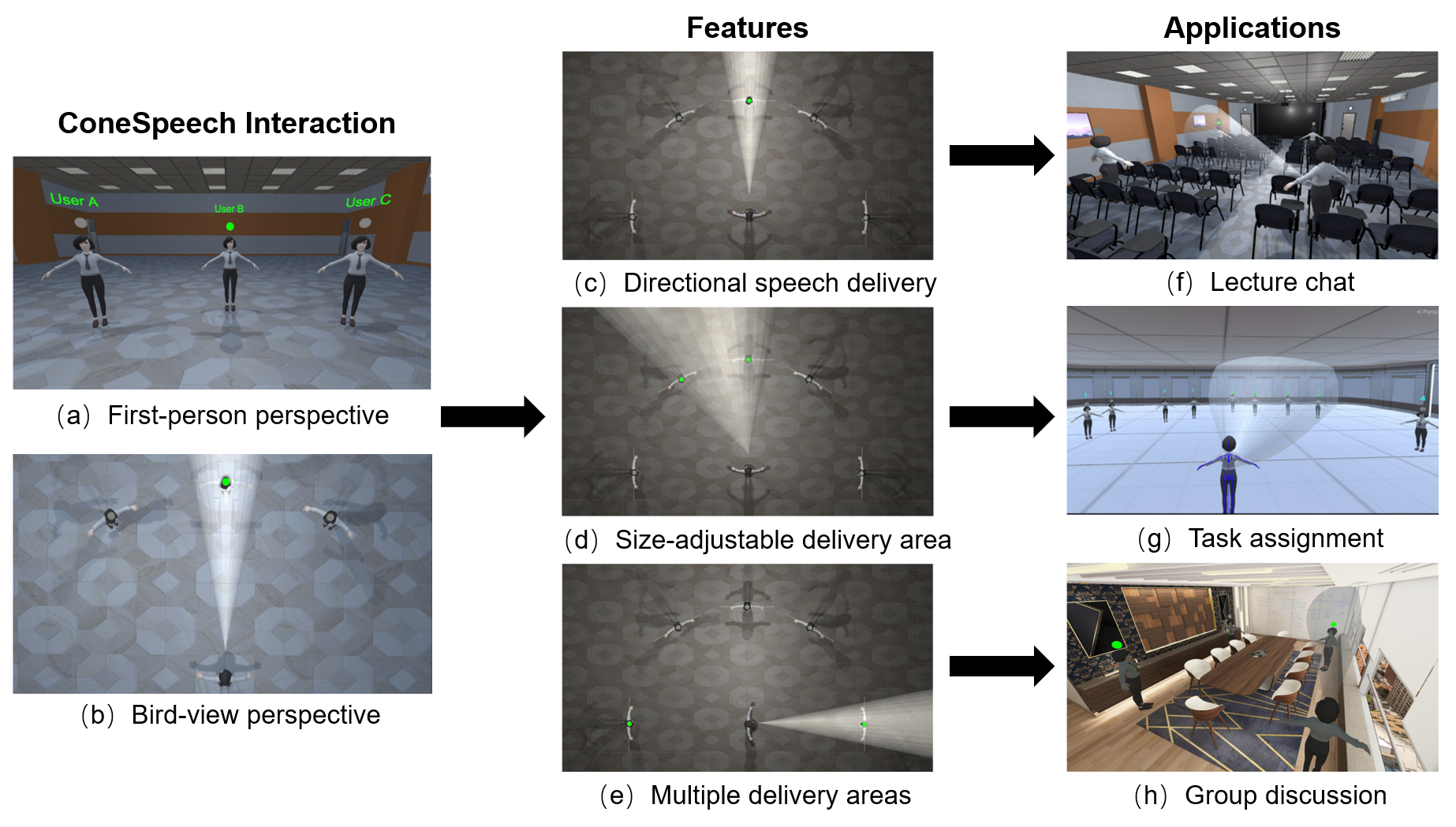

Remote communication is essential for efficient collaboration among people at different locations. We present ConeSpeech, a virtual reality (VR) based multi-user remote communication technique that enables users to speak selectively to target listeners without distracting bystanders. With ConeSpeech, the user looks at the target listener, and only listeners within a cone-shaped area in that direction can hear the speech. This reduces disturbance to surrounding, uninvolved people and prevents them from overhearing. Three features are supported: directional speech delivery, a size-adjustable delivery range, and multiple delivery areas, which together facilitate speaking to more than one listener and to listeners who are spatially intermixed with bystanders. We conducted a user study to determine the modality for controlling the cone-shaped delivery area. We then implemented the technique and evaluated its performance in three typical multi-user communication tasks, comparing it to two baseline methods. Results show that ConeSpeech balanced the convenience and flexibility of voice communication.
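The abstract describes the delivery area only as a cone in the direction the user is looking. The following is a minimal Python sketch of how such a test could work, assuming the apex sits at the speaker's head, the axis follows the gaze direction, and the "size-adjustable delivery range" is modeled as the cone's half-angle. The names `Cone`, `in_cone`, `audible_listeners`, and `half_angle_deg` are hypothetical and not taken from the ConeSpeech implementation. The sketch also ignores distance limits, audio attenuation, and the input modality used to steer and resize the cone.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


@dataclass
class Cone:
    """Hypothetical delivery area: apex at the speaker, axis along `direction`."""
    direction: Vec3        # e.g. the speaker's gaze direction (need not be unit length)
    half_angle_deg: float  # adjustable opening: a larger angle gives a wider delivery range


def in_cone(speaker: Vec3, cone: Cone, listener: Vec3) -> bool:
    """True if the listener lies within the cone's half-angle around its axis."""
    to_listener = _sub(listener, speaker)
    dist = _norm(to_listener)
    axis_len = _norm(cone.direction)
    if dist == 0.0 or axis_len == 0.0:
        return False  # degenerate case: no meaningful direction to compare
    cos_angle = _dot(to_listener, cone.direction) / (dist * axis_len)
    return cos_angle >= math.cos(math.radians(cone.half_angle_deg))


def audible_listeners(speaker: Vec3, cones: list[Cone],
                      listeners: dict[str, Vec3]) -> set[str]:
    """Listeners inside ANY cone receive the speech (multiple delivery areas)."""
    return {name for name, pos in listeners.items()
            if any(in_cone(speaker, c, pos) for c in cones)}


# Usage: carol stands close to alice; bob stands off to the side.
speaker = (0.0, 0.0, 0.0)
listeners = {"alice": (0.0, 0.0, 3.0), "bob": (3.0, 0.0, 0.0), "carol": (0.5, 0.0, 3.0)}

narrow = [Cone(direction=(0.0, 0.0, 1.0), half_angle_deg=5.0)]
wide = [Cone(direction=(0.0, 0.0, 1.0), half_angle_deg=15.0)]
two_areas = narrow + [Cone(direction=(1.0, 0.0, 0.0), half_angle_deg=5.0)]

print(sorted(audible_listeners(speaker, narrow, listeners)))     # ['alice']
print(sorted(audible_listeners(speaker, wide, listeners)))       # ['alice', 'carol']
print(sorted(audible_listeners(speaker, two_areas, listeners)))  # ['alice', 'bob']
```

In this toy setup, widening the single cone pulls in carol (about 9.5° off-axis), while using two narrow cones reaches alice and bob but still excludes carol, which mirrors the abstract's motivation for multiple delivery areas when listeners are mixed with bystanders.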

Materials

Bibtex

@ARTICLE{10049667, author={Yan, Yukang and Liu, Haohua and Shi, Yingtian and Wang, Jingying and Guo, Ruici and Li, Zisu and Xu, Xuhai and Yu, Chun and Wang, Yuntao and Shi, Yuanchun}, journal={IEEE Transactions on Visualization and Computer Graphics}, title={ConeSpeech: Exploring Directional Speech Interaction for Multi-Person Remote Communication in Virtual Reality}, year={2023}, volume={29}, number={5}, pages={2647-2657}, doi={10.1109/TVCG.2023.3247085}}