AdaptiveVoice: Cognitively Adaptive Voice Interface for Driving Assistance

Shaoyue Wen, Songming Ping, Jialin Wang, Hai-Ning Liang, Xuhai Xu, Yukang Yan

Published at CHI 2024

Abstract

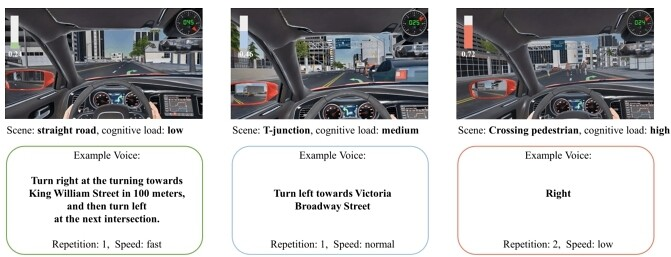

Current voice assistants present messages in a predefined format without considering users’ mental states. This paper presents an optimization-based approach to alleviate this issue, which adjusts the level of detail and the speech speed of voice messages according to the user's estimated cognitive load. In the first user study (N = 12), we investigated the impact of cognitive load on user performance. The findings reveal significant differences in preferred message formats across five cognitive load levels, substantiating the need for voice message adaptation. We then implemented AdaptiveVoice, an algorithm based on combinatorial optimization that generates adaptive voice messages in real time. In the second user study (N = 30), conducted in a VR-simulated driving environment, we compared AdaptiveVoice with a fixed-format baseline, with and without visual guidance on a heads-up display (HUD). Results indicate that users benefit from AdaptiveVoice through reduced response time and improved driving performance, particularly when it is augmented with the HUD.
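The abstract describes AdaptiveVoice only at a high level: message variants differ in level of detail and speech speed, and a combinatorial optimizer picks one according to the estimated cognitive load. The Python sketch below is not the authors' formulation. It is a minimal illustration of the general idea under stated assumptions, using a brute-force search over a tiny discrete space. The message variants, informativeness scores, speech rates, the `demand` measure, and the per-load "listening budget" (`BUDGET_BY_LOAD`) are all hypothetical values chosen for illustration. The real system's objective, constraints, and cognitive-load estimator are not reproduced here.

```python
from itertools import product

# HYPOTHETICAL message variants for one navigation cue, least to most detailed.
# Each entry: (text, informativeness score); scores are illustrative assumptions.
VARIANTS = [
    ("Turn left.", 0.5),
    ("Turn left in 200 meters.", 0.8),
    ("Turn left in 200 meters onto Main Street, then keep right.", 1.0),
]

BASE_WPM = 130                      # assumed "normal" speech rate (words per minute)
SPEECH_RATES_WPM = [130, 160, 190]  # assumed candidate speech rates

# HYPOTHETICAL listening budget for five cognitive load levels (1 = lowest load).
# Budget is in speed-weighted words; higher load leaves less room for speech.
BUDGET_BY_LOAD = {1: 15.0, 2: 12.0, 3: 7.0, 4: 4.0, 5: 2.2}


def duration_s(text: str, wpm: int) -> float:
    """Approximate spoken duration in seconds from word count and speech rate."""
    return len(text.split()) * 60.0 / wpm


def demand(text: str, wpm: int) -> float:
    """Listening demand: word count, inflated when speech is faster than BASE_WPM."""
    return len(text.split()) * wpm / BASE_WPM


def choose_message(load: int) -> tuple[str, int]:
    """Enumerate every (detail level, speech rate) pair that fits the load budget and
    return the most informative one, breaking near-ties toward shorter playback."""
    budget = BUDGET_BY_LOAD[load]
    feasible = [
        (text, info, wpm)
        for (text, info), wpm in product(VARIANTS, SPEECH_RATES_WPM)
        if demand(text, wpm) <= budget
    ]
    # With the budgets above, the shortest variant at BASE_WPM always fits,
    # so `feasible` is never empty.
    text, _info, wpm = max(feasible, key=lambda c: c[1] - 0.01 * duration_s(c[0], c[2]))
    return text, wpm


for load in (1, 3, 5):
    print(load, choose_message(load))
```

With these assumed numbers, load level 1 selects the most detailed message at 160 wpm, level 3 the medium message at 160 wpm, and level 5 the shortest message at 130 wpm. Exhaustive enumeration is only practical here because there are nine combinations; a system with more message components and rate options would likely call for a dedicated solver such as integer programming.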

Materials

BibTeX

@inproceedings{shaoyue24,
  author = {Wen, Shaoyue and Ping, Songming and Wang, Jialin and Liang, Hai-Ning and Xu, Xuhai and Yan, Yukang},
  title = {AdaptiveVoice: Cognitively Adaptive Voice Interface for Driving Assistance},
  year = {2024},
  isbn = {9798400703300},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613904.3642876},
  doi = {10.1145/3613904.3642876},
  booktitle = {Proceedings of the CHI Conference on Human Factors in Computing Systems},
  articleno = {253},
  numpages = {18},
  keywords = {Voice interface, adaptive user interface, driving assistance, workload},
  location = {Honolulu, HI, USA},
  series = {CHI '24}
}